Research

I am interested in how multiple learning pathways interact, both from an algorithmic perspective and in terms of the multi-regional circuits that underlie learning. I am also interested in the neural dynamics that emerge over learning to support new task-relevant computations, with a focus on how feedback (sensory and internal feedback loops) can flexibly modulate effective cortical dynamics, and how these input-driven dynamics are learnt via multiple forms of error.

List of Projects:

- Feedback control of Cortical Dynamics

- Data-driven models for control of biomechanical bodies

- Population dynamics in the cerebellar cortex

- Role of perceptual uncertainty in reward-driven learning

- Other projects

  - Learning without plasticity

  - Active learning for closed-loop experiments

1 Feedback control of Cortical Dynamics

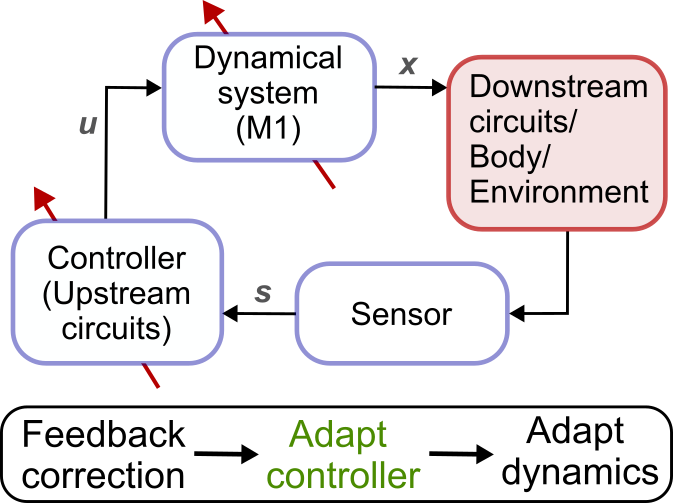

I use recurrent neural networks (RNNs) to model motor control tasks, including the use of motor brain-computer interfaces (BCIs), where feedback about the output - as an “efference copy” and/or as the sensed state of the effector (limb, cursor, voice) - is critical to successful task completion. This feedback alters network dynamics in real time to adjust the motor output in response to noise or external perturbations. Moreover, learning in these networks may involve changes to the feedback inputs rather than to the recurrent network structure itself, especially when the dynamics are expressive enough. Borrowing insights from work on state-feedback controllers and modelling these “controllers” using neural network architectures, I study the implications of such an organization for the observed structure of neural activity and for dynamical constraints on motor learning.
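
As a toy illustration of this idea (a minimal sketch, not the models used in this work), consider a two-unit linear rate network whose recurrent weights stay fixed while a feedback gain `k` on the output error is varied. All names and numerical values here (`simulate`, `W`, `b`, `c`, `k`, the perturbation size) are hypothetical choices made for the example.

```python
def simulate(k, steps=400, target=1.0, kick_at=50, kick=1.0):
    """Simulate a 2-unit linear rate network with output feedback of gain k.

    The recurrent weights W are never changed; only the feedback gain k
    differs between runs, standing in for "learning at the feedback inputs".
    """
    W = [[0.9, 0.1], [0.0, 0.8]]  # fixed recurrent weights (hypothetical)
    b = [0.0, 1.0]                # how the feedback signal enters the network
    c = [1.0, 0.0]                # linear readout of the output (e.g. a decoder)
    x = [0.0, 0.0]                # network state
    outputs = []
    for t in range(steps):
        y = c[0] * x[0] + c[1] * x[1]   # current output
        u = k * (target - y)            # feedback input driven by output error
        x = [
            W[0][0] * x[0] + W[0][1] * x[1] + b[0] * u,
            W[1][0] * x[0] + W[1][1] * x[1] + b[1] * u,
        ]
        if t == kick_at:
            x[0] += kick                # one-off external perturbation
        outputs.append(y)
    return outputs


if __name__ == "__main__":
    for gain in (0.0, 0.5, 2.0):
        final = simulate(gain)[-1]
        print(f"feedback gain {gain}: final output {final:.3f}")
```

With `k = 0` the output decays back to zero after the perturbation, whereas a non-zero gain pulls the output back towards the target. For this particular system the steady-state output is `k / (0.2 + k)` times the target, so a larger gain reduces the residual error without any change to `W`.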

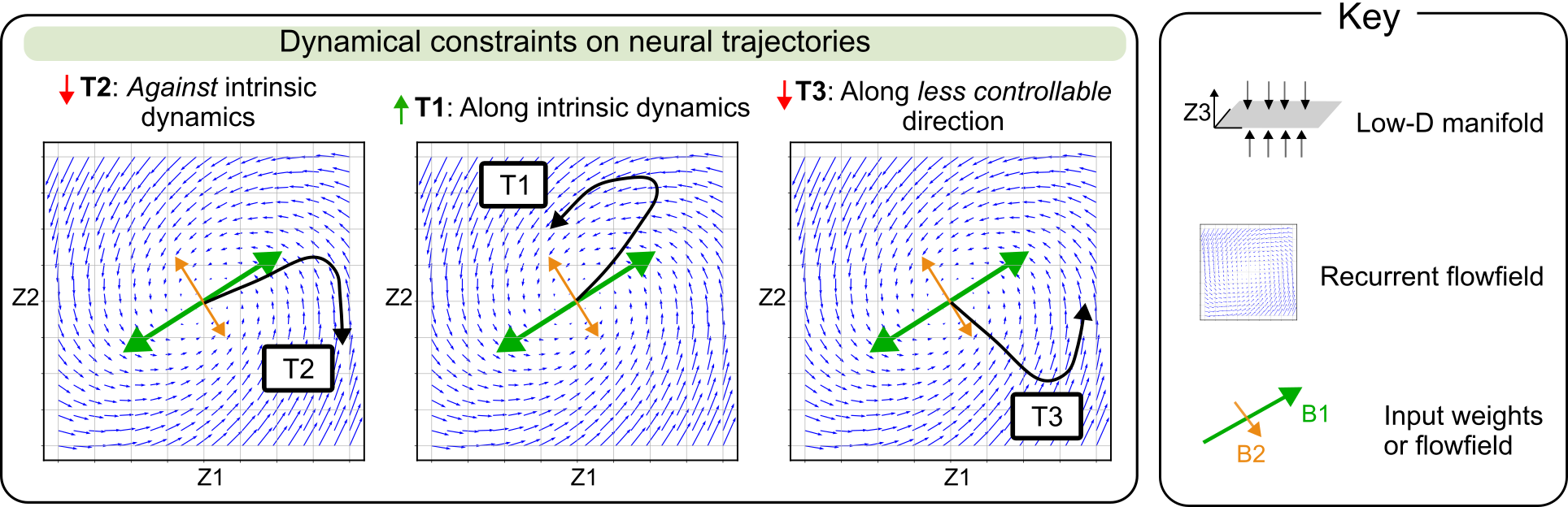

Previous work has shown that neural activity is often constrained to low-dimensional manifolds. We find that beyond neural geometry, structured dynamics on these manifolds further constrain learning on fast timescales. For motor circuits, these dynamics are shaped not just by internal recurrence but by low-dimensional control inputs based on continuous sensory feedback. By studying learning phenomena in a brain-computer interface (BCI) task and modelling plasticity at upstream controllers (such as cerebellar and premotor areas), we show that (i) feedback controllability, (ii) control bottlenecks, and (iii) input-driven flow-fields explain variable success and rates of adaptation to different BCI decoders, which we refer to as dynamical constraints on learning.

2 Data-driven models for control of biomechanical bodies

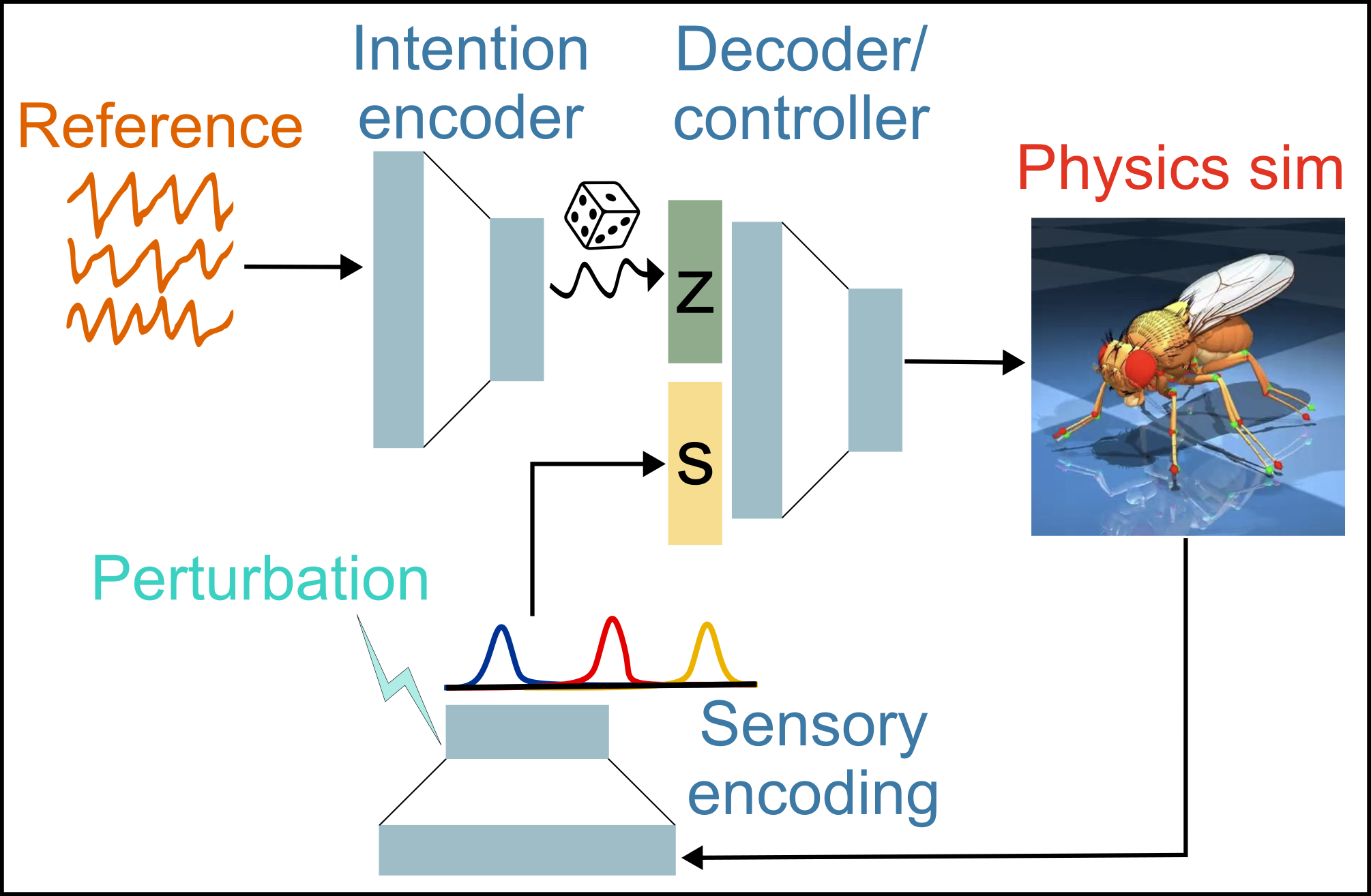

Neuroscientists have long studied central pattern generators (CPGs) that generate oscillatory activity patterns for rhythmic motor outputs, such as limbed locomotion. However, when a walking animal encounters an unexpected perturbation (e.g. uneven terrain, being pushed), it must integrate feedback from proprioceptive sensory neurons with ongoing rhythmic feedforward commands to adjust, recover, and sustain walking. I am using a data-driven integrative modeling approach to identify the neural computations underlying robust locomotion in Drosophila (fruit fly model system). In this approach, modular neural network controllers, with biologically grounded models of proprioceptive sensing, control biomechanical models simulated with physics engines and are trained using imitation learning to produce realistic 3D kinematics. By manipulating the activity of proprioceptive neurons in silico and quantifying the altered kinematics in these closed-loop models, I am able to identify the role of different classes of sensory feedback (joint position, joint velocity, contact) in shaping walking behavior. In collaboration with John Tuthill at UW, I am also comparing these predictions to the behavioral responses of actual walking flies to optogenetic activation of sensory neurons.

3 Population dynamics in the cerebellar cortex

The cerebellum - an important locus for motor learning and sensorimotor coordination - interacts reciprocally with the neocortex via disynaptic pathways. Cortico-pontine inputs enter the first stage of cerebellar processing as mossy fibre (MF) terminals, where expansion recoding at granule cells (GrCs) is suggested to transform these inputs into more learnable and separable representations. However, granule cell activity is critically regulated by a small but powerful inhibitory network of Golgi cells (GoCs), which have been implicated in both homeostatic scaling and regulating GrC activity sparseness, dimensionality and spike timing.

During my PhD, I studied the spatiotemporal structure of GoC network activity, along with its relation to the feedforward mossy fibre inputs, to resolve how a small electrically-coupled network may perform such varied computational roles.

3A Dynamics of electrically-coupled cerebellar inhibitory networks

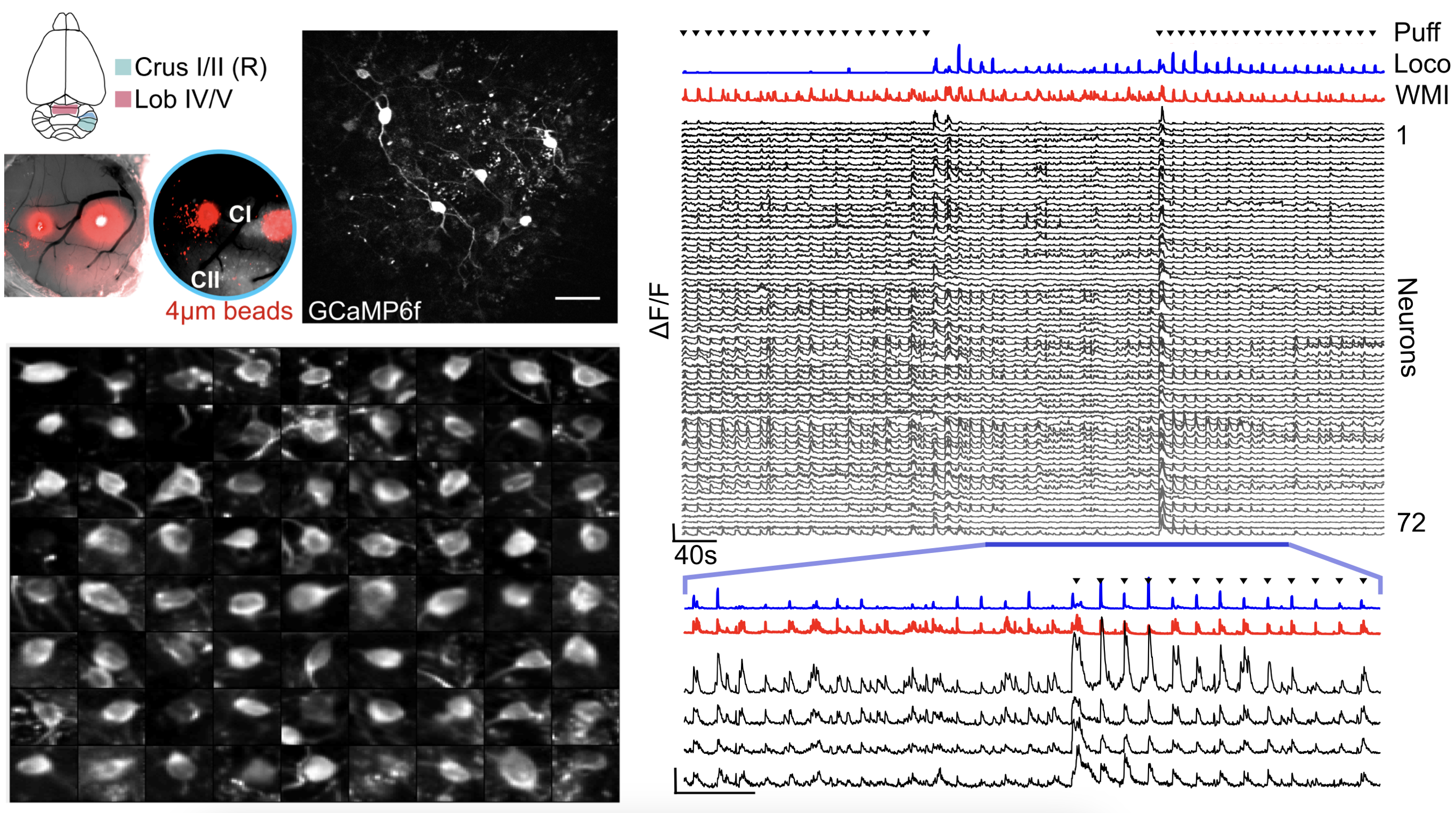

Two-photon imaging of Golgi cell network activity

To examine the organisation of inhibitory population dynamics in the cerebellar granule cell layer, I used 3D random-access microscopy to monitor the activity of sparsely distributed Golgi cells. Using this approach, we described multidimensional GoC population activity, with both widespread and distributed components, that makes the network well-suited for modulating the threshold and gain of downstream cerebellar granule cells and for introducing spatiotemporal patterning.

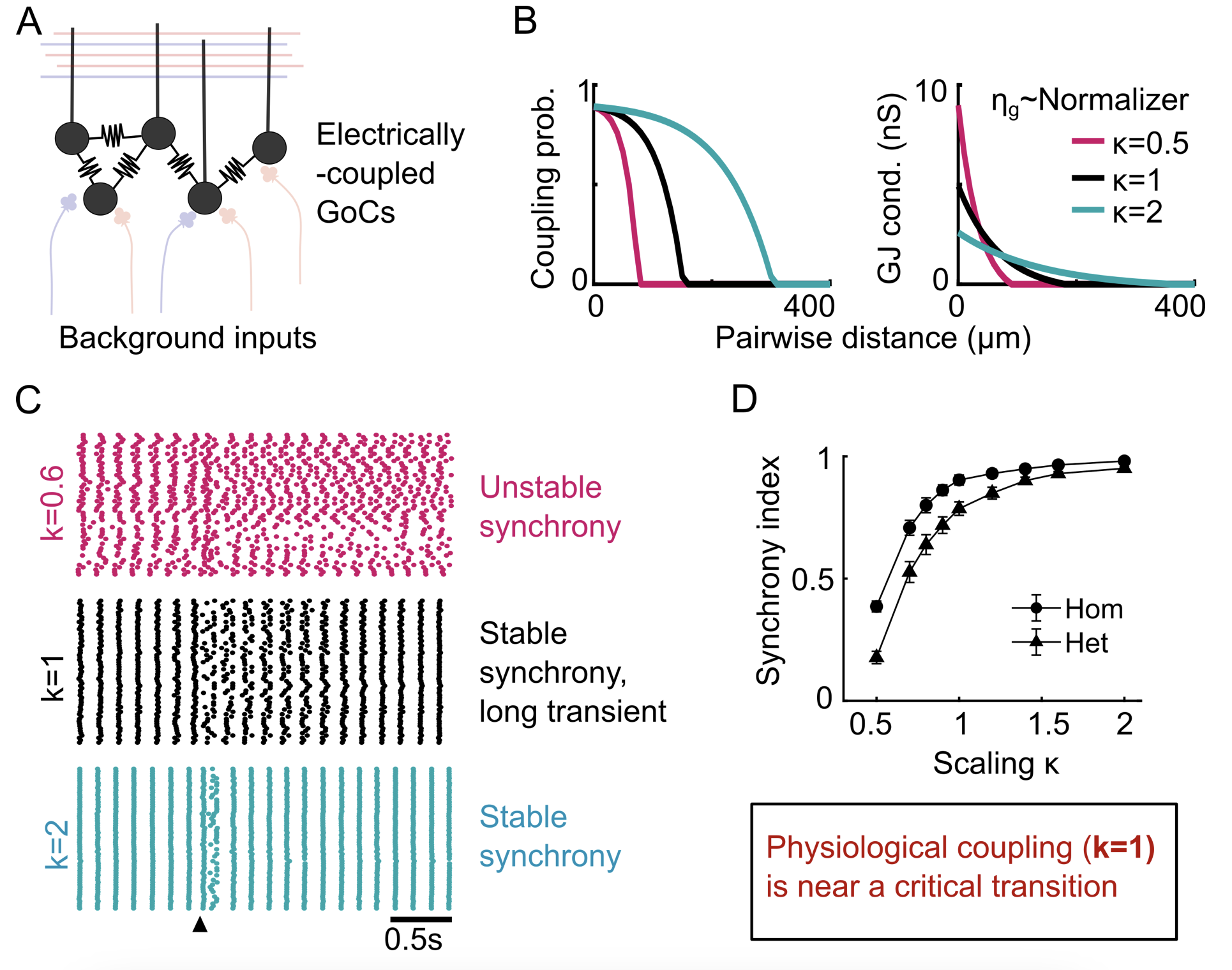

Dynamical regime changes with electrical connectivity topology

GoCs connect to each other via electrical synapses. We showed that network topology plays a role in determining the stability of synchronous spiking, as well as in shaping slow dynamics. Moreover, the experimentally measured connectivity scale is close to a critical transition, resulting in long input-driven transients but ultimately stable synchrony. This allows us to posit new normative theories about the potential computational benefits of this dynamical regime.

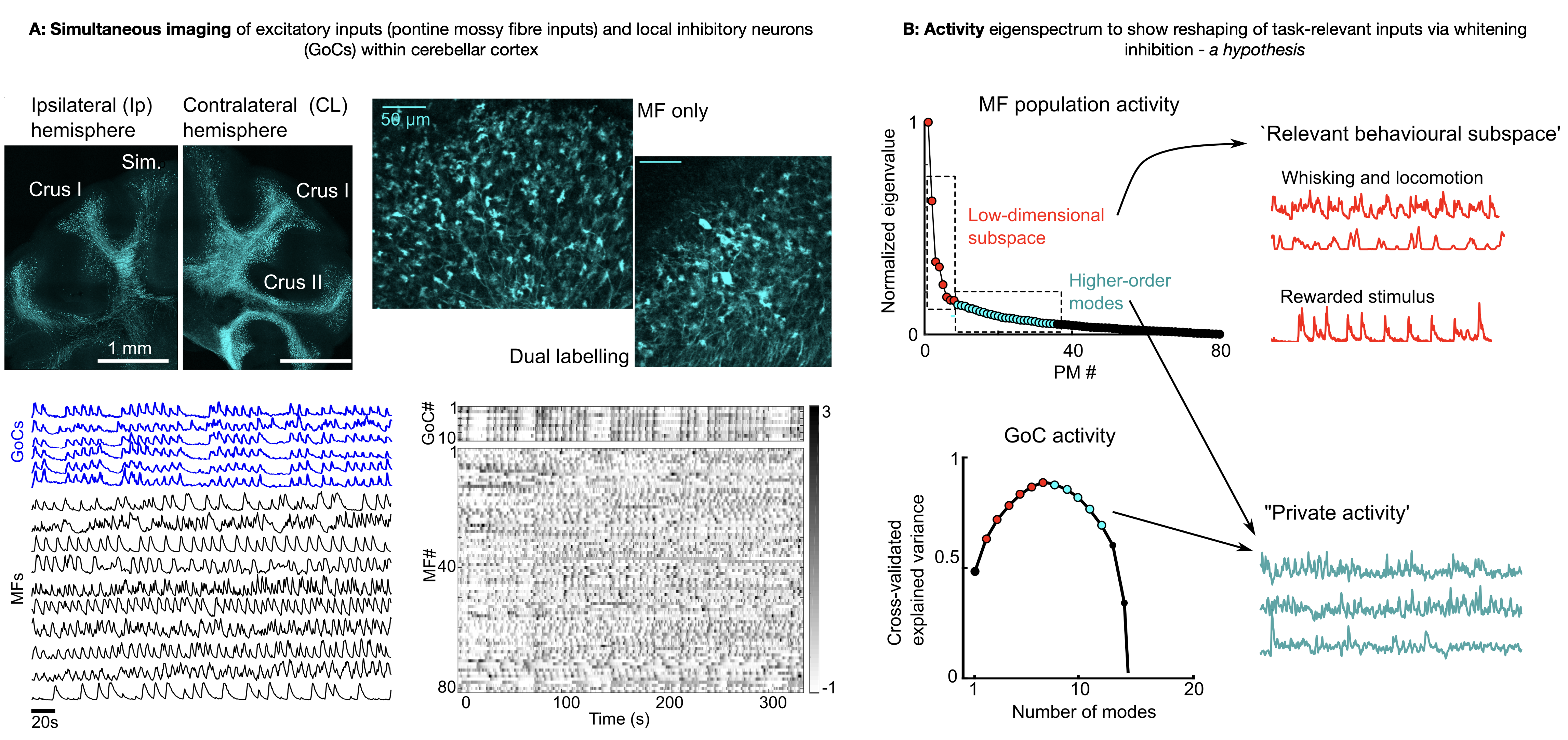

3B Sensorimotor transformation across the cerebellar circuit

To further understand the role of structured inhibition in cerebellar computation, I used 3D random-access microscopy to monitor the activity of mossy fibre (MF) inputs simultaneously with GoCs in multiple paradigms - spontaneous behaviors, passive auditory stimuli, and throughout the acquisition of an auditory Go/No-Go task. By examining the plasticity of MF representations as well as how the relationship between inputs and GoC network activity changes during active behaviors, we test several theoretical predictions and provide a conceptual framework for the role of inhibition in shaping cerebellar cortical representations. Indeed, this adds to the growing consensus that even the primary stage of cerebellar processing shows task-specific adaptation and efficient representations, rather than a uniformly high-dimensional code.

4 Role of perceptual uncertainty in reward-driven learning

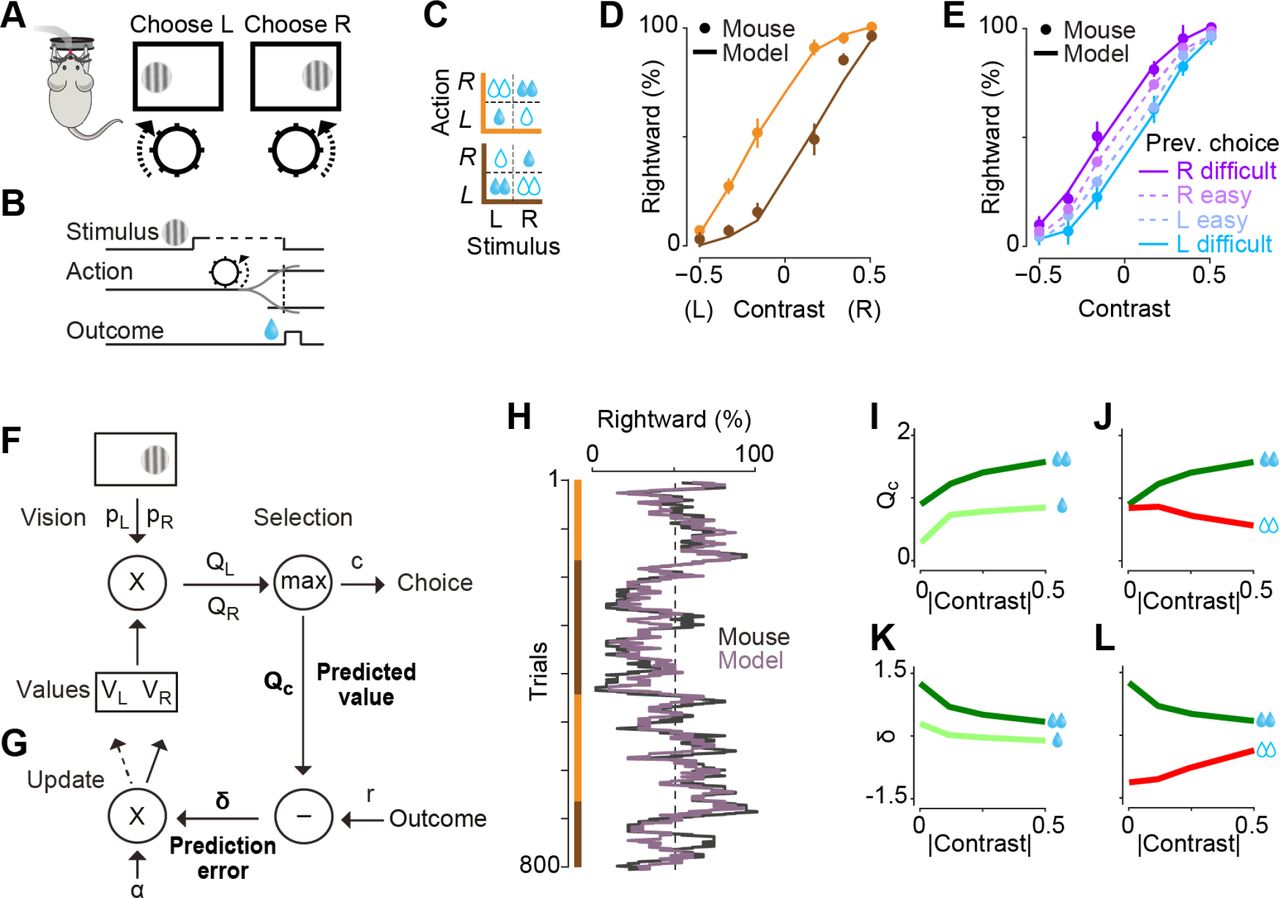

(Figure reproduced from Lak et al., Neuron 2020: Behavioral and computational signatures of decisions guided by reward value and sensory confidence)

In a standard reinforcement-learning setting, expected returns are compared against true returns to update our learnt values and action policy. The “expected returns” or predictions (“Q-value tables”) are based on knowledge - or more accurately, our inference - of our current state and the actions we have recently taken. However, uncertainty about our state should be reflected as uncertainty in the reward prediction error, and thus in the amount of learning at that time. This state uncertainty often stems from perceptual uncertainty, i.e. noisy or incomplete sensory information that we use for state inference. Lak et al. studied the learning of a perceptual decision-making task in mice, where mice had to choose appropriate motor actions based on noisy visual cues in order to get water rewards. We modelled the improvement in task performance as a reinforcement-learning process in which value is a combination of sensory confidence and reward. Fitting these models to the behavioural data offered a parsimonious explanation of the animals’ sequence of choices and patterns of errors.
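
The core idea can be sketched in a few lines. The following is a minimal illustration under simplifying assumptions, not the fitted model from the paper: two hidden states (stimulus on the left or right), a Gaussian percept with a flat prior, and a Q-table whose update is weighted by the belief over states. The function names, the learning rate `alpha`, and the noise level `sigma` are all hypothetical.

```python
import math


def belief_right(percept, sigma):
    """P(stimulus is on the right | noisy percept), assuming Gaussian
    sensory noise with standard deviation sigma and a flat prior."""
    return 0.5 * (1.0 + math.erf(percept / (sigma * math.sqrt(2.0))))


def choice_value(q, belief, action):
    """Expected value of an action, averaged over the belief about the state."""
    return sum(belief[s] * q[s][action] for s in range(2))


def update(q, belief, action, reward, alpha=0.2):
    """Belief-weighted Q-learning step.

    The prediction error compares the reward to the belief-weighted
    prediction; each state's entry is credited in proportion to how
    likely that state was believed to be.
    """
    delta = reward - choice_value(q, belief, action)
    new_q = [row[:] for row in q]
    for s in range(2):
        new_q[s][action] += alpha * belief[s] * delta
    return new_q, delta


if __name__ == "__main__":
    # q[state][action]: states 0/1 = stimulus left/right, actions 0/1 = choose left/right
    q = [[0.8, 0.0], [0.0, 0.8]]
    for percept in (0.25, 1.5):  # weak vs strong evidence for "right"
        p = belief_right(percept, sigma=1.0)
        _, delta = update(q, [1.0 - p, p], action=1, reward=1.0)
        print(f"percept {percept}: belief(right) = {p:.2f}, prediction error = {delta:.2f}")
```

In this sketch, a rewarded choice made under weak evidence yields a larger prediction error than the same choice made under strong evidence, because the belief-weighted prediction is lower when the state is uncertain.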

5 Ongoing Projects

Learning without Plasticity

Animals and humans show a remarkable ability to adaptively remap sensory flow into volitional movements, on timescales of seconds, minutes and hours. Much of this fast learning relies on activity-dependent processes - contextual and structure inference, input-driven reorganization of dynamics, and flexible association between brain areas. I am using network models and multi-region data to develop a theoretical and biological understanding of such learning without plasticity.

Active learning for closed-loop experiments

Recent technological developments are increasing our ability to flexibly and dynamically manipulate large neural populations at fine resolution. Such flexibility in designing the spatiotemporal pattern of stimulation leads to a very high-dimensional control problem, which is not amenable to manual search. The challenge is further exacerbated by complex neural connectivity and dynamics, which yield highly nonlinear and time-varying responses even to simple stimulation inputs, such as an impulse. In collaboration with Juncal Arbelaiz at Princeton University, I will use system identification and optimization techniques for both “control for learning” and “learning to control” neural dynamics.